Puang, En Yen; Li, Zechen; Chew, Chee Meng; Luo, Shan; Wu, Yan Learning Stable Robot Grasping with Transformer-based Tactile Control Policies Honorable Mention Proceedings Article Forthcoming In: 2024 IEEE 19th Conference on Industrial Electronics and Applications (ICIEA), Forthcoming.
Zhu, Wenxin; Liang, Wenyu; Ren, Qinyuan; Wu, Yan Gaussian Process Model Predictive Admittance Control for Compliant Tool-Environment Interaction Honorable Mention Proceedings Article Forthcoming In: 2024 IEEE 19th Conference on Industrial Electronics and Applications (ICIEA), Forthcoming.
Marchesi, Serena; De Tommaso, Davide; Kompatsiari, Kyveli; Wu, Yan; Wykowska, Agnieszka Tools and methods to study and replicate experiments addressing human social cognition in interactive scenarios Journal Article In: Behavior Research Methods, vol. 56, pp. 7543–7560, 2024.
Zhao, Xinyuan; Liang, Wenyu; Zhang, Xiaoshi; Chew, Chee Meng; Wu, Yan Unknown Object Retrieval in Confined Space through Reinforcement Learning with Tactile Exploration Proceedings Article In: 2024 IEEE International Conference on Robotics and Automation (ICRA), pp. 10881-10887, IEEE, 2024, ISBN: 979-8-3503-8457-4.
Acar, Cihan; Binici, Kuluhan; Tekirdağ, Alp; Wu, Yan Visual-Policy Learning through Multi-Camera View to Single-Camera View Knowledge Distillation for Robot Manipulation Tasks Journal Article In: IEEE Robotics and Automation Letters, pp. 1-8, 2023.
Marchesi, Serena; Abubshait, Abdulaziz; Kompatsiari, Kyveli; Wu, Yan; Wykowska, Agnieszka Cultural differences in joint attention and engagement in mutual gaze with a robot face Journal Article In: Scientific Reports, vol. 13, iss. 11689, 2023.
Sui, Xiuchao; Li, Shaohua; Yang, Hong; Zhu, Hongyuan; Wu, Yan Language Models can do Zero-Shot Visual Referring Expression Comprehension Proceedings Article In: Eleventh International Conference on Learning Representations (ICLR) 2023, 2023.
Zandonati, Ben; Wang, Ruohan; Gao, Ruihan; Wu, Yan Investigating vision foundational models for tactile representation learning Working paper 2023.
Zhang, Hanwen; Lu, Zeyu; Liang, Wenyu; Yu, Haoyong; Mao, Yao; Wu, Yan Interaction Control for Tool Manipulation on Deformable Objects Using Tactile Feedback Journal Article In: IEEE Robotics and Automation Letters, vol. 8, iss. 5, pp. 2700-2707, 2023, ISSN: 2377-3766.
Tian, Daiying; Fang, Hao; Yang, Qingkai; Yu, Haoyong; Liang, Wenyu; Wu, Yan Reinforcement learning under temporal logic constraints as a sequence modeling problem Journal Article In: Robotics and Autonomous Systems, vol. 161, pp. 104351, 2023, ISSN: 0921-8890.
Wang, Haodong; Liang, Wenyu; Liang, Boyuan; Ren, Hongliang; Du, Zhijiang; Wu, Yan Robust Position Control of a Continuum Manipulator Based on Selective Approach and Koopman Operator Journal Article In: IEEE Transactions on Industrial Electronics, 2023, ISSN: 0278-0046.
Liang, Wenyu; Fang, Fen; Acar, Cihan; Toh, Wei Qi; Sun, Ying; Xu, Qianli; Wu, Yan Visuo-Tactile Feedback-Based Robot Manipulation for Object Packing Journal Article In: IEEE Robotics and Automation Letters, vol. 8, iss. 2, pp. 1151-1158, 2023, ISSN: 2377-3766.
Wang, Tianying; Puang, En Yen; Lee, Marcus; Wu, Yan; Jing, Wei End-to-end Reinforcement Learning of Robotic Manipulation with Robust Keypoints Representation Proceedings Article In: 2022 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), 2022, ISBN: 978-616-590-477-3.
Liang, Boyuan; Liang, Wenyu; Wu, Yan Tactile-Guided Dynamic Object Planar Manipulation Proceedings Article In: 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3203-3209, IEEE, 2022, ISBN: 978-1-6654-7927-1.
Fang, Fen; Liang, Wenyu; Wu, Yan; Xu, Qianli; Lim, Joo-Hwee Improving Generalization of Reinforcement Learning Using a Bilinear Policy Network Proceedings Article In: 2022 IEEE International Conference on Image Processing (ICIP), pp. 991-995, IEEE, 2022, ISBN: 978-1-6654-9620-9.
Tian, Daiying; Fang, Hao; Yang, Qingkai; Guo, Zixuan; Cui, Jinqiang; Liang, Wenyu; Wu, Yan Two-Phase Motion Planning under Signal Temporal Logic Specifications in Partially Unknown Environments Journal Article In: IEEE Transactions on Industrial Electronics, 2022, ISSN: 0278-0046.
Fang, Fen; Liang, Wenyu; Wu, Yan; Xu, Qianli; Lim, Joo Hwee Self-Supervised Reinforcement Learning for Active Object Detection Proceedings Article In: 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 10224-10231, IEEE, 2022, ISSN: 2377-3766.
Sui, Xiuchao; Li, Shaohua; Geng, Xue; Wu, Yan; Xu, Xinxing; Liu, Yong; Goh, Rick; Zhu, Hongyuan CRAFT: Cross-Attentional Flow Transformers for Robust Optical Flow Proceedings Article In: 2022 Conference on Computer Vision and Pattern Recognition (CVPR), 2022.
Diao, Qi; Dai, Yaping; Zhang, Ce; Wu, Yan; Feng, Xiaoxue; Pan, Feng Superpixel-based attention graph neural network for semantic segmentation in aerial images Journal Article In: Remote Sensing, vol. 14, no. 2, 2022.
Liang, Boyuan; Liang, Wenyu; Wu, Yan Parameterized Particle Filtering for Tactile-based Simultaneous Pose and Shape Estimation Journal Article In: IEEE Robotics and Automation Letters (RA-L), vol. 7, no. 2, pp. 1270-1277, 2021, ISSN: 2377-3766, (also accepted by ICRA 2022).
Gauthier, Nicolas; Liang, Wenyu; Xu, Qianli; Fang, Fen; Li, Liyuan; Gao, Ruihan; Wu, Yan; Lim, Joo Hwee Towards a Programming-Free Robotic System for Assembly Tasks Using Intuitive Interactions Conference The 13th International Conference on Social Robotics (ICSR 2021), vol. 13086, Lecture Notes in Computer Science Springer, 2021, ISBN: 978-3-030-90525-5, (Best Presentation Award).
Marchesi, Serena; De Tommaso, Davide; Perez-Osorio, Jairo; Tan, Ming Min; Hoh, Ching Ru; Wu, Yan; Wykowska, Agnieszka The consequences of being human-like – an intercultural study Workshop IROS 2021 Workshop on Human-like Behavior and Cognition in Robots, 2021.
Gao, Ruihan; Tian, Tian; Lin, Zhiping; Wu, Yan On Explainability and Sensor-Adaptability of a Robot Tactile Texture Representation Using a Two-Stage Recurrent Networks Proceedings Article In: 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.
Xu, Qianli; Fang, Fen; Gauthier, Nicolas; Liang, Wenyu; Wu, Yan; Li, Liyuan; Lim, Joo Hwee Towards Efficient Multiview Object Detection with Adaptive Action Prediction Proceedings Article In: 2021 IEEE International Conference on Robotics and Automation (ICRA), IEEE, 2021, ISBN: 978-1-7281-9077-8.
Liang, Wenyu; Ren, Qinyuan; Chen, Xiaoqiao; Gao, Junli; Wu, Yan Dexterous Manoeuvre through Touch in a Cluttered Scene Proceedings Article In: 2021 IEEE International Conference on Robotics and Automation (ICRA), IEEE, 2021, ISBN: 978-1-7281-9077-8.
Xu, Qianli; Gauthier, Nicolas; Liang, Wenyu; Fang, Fen; Tan, Hui Li; Sun, Ying; Wu, Yan; Li, Liyuan; Lim, Joo Hwee TAILOR: Teaching with Active and Incremental Learning for Object Registration Proceedings Article In: Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI), pp. 16120-16123, AAAI, 2021, (AAAI'21 Best Demo Award).
Li, Dongyu; Yu, Haoyong; Tee, Keng Peng; Wu, Yan; Ge, Shuzhi Sam; Lee, Tong Heng On Time-Synchronized Stability and Control Journal Article In: IEEE Transactions on Systems, Man, and Cybernetics: Systems, pp. 1-14, 2021, ISSN: 2168-2216.
Cheng, Yi; Zhu, Hongyuan; Sun, Ying; Acar, Cihan; Jing, Wei; Wu, Yan; Li, Liyuan; Tan, Cheston; Lim, Joo Hwee 6D Pose Estimation with Correlation Fusion Proceedings Article In: 25th International Conference on Pattern Recognition (ICPR), pp. 2988-2994, Milan, Italy, 2021, ISBN: 978-1-7281-8808-9.
Gao, Ruihan; Taunyazov, Tasbolat; Lin, Zhiping; Wu, Yan Supervised Autoencoder Joint Learning on Heterogeneous Tactile Sensory Data: Improving Material Classification Performance Proceedings Article In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, USA, 2020, ISBN: 978-1-7281-6212-6.
Liang, Wenyu; Feng, Zhao; Wu, Yan; Gao, Junli; Ren, Qinyuan; Lee, Tong Heng Robust Force Tracking Impedance Control of an Ultrasonic Motor-actuated End-effector in a Soft Environment Proceedings Article In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, USA, 2020.
Taunyazov, Tasbolat; Chua, Yansong; Gao, Ruihan; Soh, Harold; Wu, Yan Fast Texture Classification Using Tactile Neural Coding and Spiking Neural Network Proceedings Article In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, USA, 2020.
Jing, Wei; Deng, Di; Wu, Yan; Shimada, Kenji Multi-UAV Coverage Path Planning for the Inspection of Large and Complex Structures via Path Primitive Sampling Proceedings Article In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, USA, 2020.
Cai, Caixia; Liang, Ying Siu; Somani, Nikhil; Wu, Yan Inferring the Geometric Nullspace of Robot Skills from Human Demonstrations Proceedings Article In: 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 7668-7675, IEEE, Paris, France, 2020, ISSN: 2577-087X.
Chi, Haozhen; Li, Xuefang; Liang, Wenyu; Wu, Yan; Ren, Qinyuan Motion Control of a Soft Circular Crawling Robot via Iterative Learning Control Proceedings Article In: 2019 IEEE 58th Conference on Decision and Control (CDC), pp. 6524-6529, IEEE, Nice, France, 2019, ISBN: 978-1-7281-1398-2.
Wang, Tianying; Zhang, Hao; Toh, Wei Qi; Zhu, Hongyuan; Tan, Cheston; Wu, Yan; Liu, Yong; Jing, Wei Efficient Robotic Task Generalization Using Deep Model Fusion Reinforcement Learning Proceedings Article In: 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), pp. 148-153, IEEE, Dali, China, 2019, ISBN: 978-1-7281-6321-5.
Pang, Jiangnan; Shao, Yibo; Chi, Haozhen; Wu, Yan Modeling and Control of A Soft Circular Crawling Robot Proceedings Article In: The 45th Annual Conference of the IEEE Industrial Electronics Society (IECON), pp. 5243-5248, IEEE, Lisbon, Portugal, 2019, ISBN: 978-1-7281-4878-6.
Cai, Siqi; Chen, Yan; Huang, Shuangyuan; Wu, Yan; Zheng, Haiqing; Li, Xin; Xie, Longhan SVM-Based Classification of sEMG Signals for Upper-Limb Self-Rehabilitation Training Journal Article In: Frontiers in Neurorobotics, vol. 13, pp. 31, 2019, ISSN: 1662-5218.
Taunyazov, Tasbolat; Koh, Hui Fang; Wu, Yan; Cai, Caixia; Soh, Harold Towards Effective Tactile Identification of Textures using a Hybrid Touch Approach Proceedings Article In: 2019 International Conference on Robotics and Automation (ICRA), pp. 4269-4275, IEEE, Montreal, Canada, 2019, ISBN: 978-1-5386-6027-0.
You, Yangwei; Cai, Caixia; Wu, Yan 3D Visibility Graph Based Motion Planning and Control Proceedings Article In: The 2019 5th International Conference on Robotics and Artificial Intelligence (ICRAI), pp. 48–53, ACM, Singapore, 2019, ISBN: 9781450372350.
Wong, Clarice Jiaying; Tay, Yong Ling; Lew, Lincoln W C; Koh, Hui Fang; Xiong, Yijing; Wu, Yan Advbot: Towards Understanding Human Preference in a Human-Robot Interaction Scenario Proceedings Article In: The 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), pp. 1305-1309, IEEE, Singapore, 2018.
Tee, Keng Peng; Wu, Yan Experimental Evaluation of Divisible Human-Robot Shared Control for Teleoperation Assistance Proceedings Article In: 2018 IEEE Region 10 Conference (TENCON), pp. 0182-0187, IEEE, Jeju, South Korea, 2018, ISBN: 978-1-5386-5457-6.
Wu, Yan; Wang, Ruohan; D'Haro, Luis Fernando; Banchs, Rafael E; Tee, Keng Peng Multi-Modal Robot Apprenticeship: Imitation Learning Using Linearly Decayed DMP+ in a Human-Robot Dialogue System Proceedings Article In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1-7, IEEE, Madrid, Spain, 2018, ISBN: 978-1-5386-8094-0.
Wu, Yan; Wang, Ruohan; Tay, Yong Ling; Wong, Clarice Jiaying Investigation on the Roles of Human and Robot in Collaborative Storytelling Proceedings Article In: 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pp. 063-068, IEEE, Kuala Lumpur, Malaysia, 2017, ISBN: 978-1-5386-1542-3.
Volpi, Nicola Catenacci; Wu, Yan; Ognibene, Dimitri Towards Event-based MCTS for Autonomous Cars Proceedings Article In: 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pp. 420-427, IEEE, Kuala Lumpur, Malaysia, 2017, ISBN: 978-1-5386-1542-3.
Bu, Fan; Wu, Yan Towards a Human-Robot Teaming System for Exploration of Environment Proceedings Article In: 2017 International Conference on Orange Technologies (ICOT), pp. 1-6, IEEE, Singapore, 2017, ISBN: 978-1-5386-3276-5.
Su, Yanyu; Wu, Yan; Gao, Yongzhuo; Dong, Wei; Sun, Yixuan; Du, Zhijiang An Upper Limb Rehabilitation System with Motion Intention Detection Proceedings Article In: The 2nd International Conference on Advanced Robotics and Mechatronics (ICARM), pp. 510-516, IEEE, Hefei, China, 2017, ISBN: 978-1-5386-3260-4.
Ko, Wilson Kien Ho; Wu, Yan; Tee, Keng Peng LAP: A Human-in-the-loop Adaptation Approach for Industrial Robots Proceedings Article In: The Fourth International Conference on Human Agent Interaction (HAI), pp. 313–319, ACM, Singapore, 2016, ISBN: 978-1-4503-4508-8.
Shen, Zhuoyu; Wu, Yan Investigation of Practical Use of Humanoid Robots in Elderly Care Centres Proceedings Article In: The Fourth International Conference on Human Agent Interaction (HAI), pp. 63–66, ACM, Singapore, 2016, ISBN: 978-1-4503-4508-8.
Wang, Ruohan; Wu, Yan; Chan, Wei Liang; Tee, Keng Peng Dynamic Movement Primitives Plus: For enhanced reproduction quality and efficient trajectory modification using truncated kernels and Local Biases Proceedings Article In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3765-3771, IEEE, Daejeon, South Korea, 2016, ISBN: 978-1-5090-3762-9.
Li, Yanan; Tee, Keng Peng; Yan, Rui; Chan, Wei Liang; Wu, Yan A Framework of Human-Robot Coordination Based on Game Theory and Policy Iteration Journal Article In: IEEE Transactions on Robotics, vol. 32, no. 6, pp. 1408-1418, 2016, ISSN: 1552-3098.

2024

@inproceedings{puang2024learning,

title = {Learning Stable Robot Grasping with Transformer-based Tactile Control Policies},

author = {En Yen Puang and Zechen Li and Chee Meng Chew and Shan Luo and Yan Wu},

url = {https://arxiv.org/abs/2407.21172},

year = {2024},

date = {2024-08-05},

urldate = {2024-08-05},

booktitle = {2024 IEEE 19th Conference on Industrial Electronics and Applications (ICIEA)},

abstract = {Measuring grasp stability is an important skill for dexterous robot manipulation tasks, and it can be inferred from haptic information with a tactile sensor. Control policies have to detect rotational displacement and slippage from tactile feedback, and determine a re-grasp strategy in terms of location and force. The classic stable grasp task only trains control policies to solve for re-grasp location with objects of fixed center of gravity. In this work, we propose a revamped version of the stable grasp task that optimises both re-grasp location and gripping force for objects with unknown and moving center of gravity. We tackle this task with a model-free, end-to-end Transformer-based reinforcement learning framework. We show that our approach is able to solve both objectives after training, both in simulation and in a real-world setup with zero-shot transfer. We also provide a performance analysis of different models to understand the dynamics of optimizing two opposing objectives.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

@inproceedings{zhu2024gaussian,

title = {Gaussian Process Model Predictive Admittance Control for Compliant Tool-Environment Interaction},

author = {Wenxin Zhu and Wenyu Liang and Qinyuan Ren and Yan Wu},

year = {2024},

date = {2024-08-05},

urldate = {2024-08-05},

booktitle = {2024 IEEE 19th Conference on Industrial Electronics and Applications (ICIEA)},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

@article{marchesi2024tools,

title = {Tools and methods to study and replicate experiments addressing human social cognition in interactive scenarios},

author = {Serena Marchesi and Davide De Tommaso and Kyveli Kompatsiari and Yan Wu and Agnieszka Wykowska},

url = {https://link.springer.com/article/10.3758/s13428-024-02434-z},

doi = {10.3758/s13428-024-02434-z},

year = {2024},

date = {2024-05-23},

journal = {Behavior Research Methods},

volume = {56},

pages = {7543–7560},

abstract = {In the last decade, scientists investigating human social cognition have started bringing traditional laboratory paradigms more “into the wild” to examine how socio-cognitive mechanisms of the human brain work in real-life settings. As this implies transferring 2D observational paradigms to 3D interactive environments, there is a risk of compromising experimental control. In this context, we propose a methodological approach which uses humanoid robots as proxies of social interaction partners and embeds them in experimental protocols that adapt classical paradigms of cognitive psychology to interactive scenarios. This allows for a relatively high degree of “naturalness” of interaction and excellent experimental control at the same time. Here, we present two case studies where our methods and tools were applied and replicated across two different laboratories, namely the Italian Institute of Technology in Genova (Italy) and the Agency for Science, Technology and Research in Singapore. In the first case study, we present a replication of an interactive version of a gaze-cueing paradigm reported in Kompatsiari et al. (J Exp Psychol Gen 151(1):121–136, 2022). The second case study presents a replication of a “shared experience” paradigm reported in Marchesi et al. (Technol Mind Behav 3(3):11, 2022). As both studies replicate results across labs and different cultures, we argue that our methods allow for reliable and replicable setups, even though the protocols are complex and involve social interaction. We conclude that our approach can be of benefit to the research field of social cognition and grant higher replicability, for example, in cross-cultural comparisons of social cognition mechanisms.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@inproceedings{zhao2024unknown,

title = {Unknown Object Retrieval in Confined Space through Reinforcement Learning with Tactile Exploration},

author = {Xinyuan Zhao and Wenyu Liang and Xiaoshi Zhang and Chee Meng Chew and Yan Wu},

url = {https://ieeexplore.ieee.org/document/10611541},

doi = {10.1109/ICRA57147.2024.10611541},

isbn = {979-8-3503-8457-4},

year = {2024},

date = {2024-05-13},

booktitle = {2024 IEEE International Conference on Robotics and Automation (ICRA)},

pages = {10881-10887},

publisher = {IEEE},

abstract = {The potential of tactile sensing for dexterous robotic manipulation has been demonstrated by its ability to enable nuanced real-world interactions. In this study, the retrieval of unknown objects from confined spaces, a setting unsuitable for conventional visual perception and gripper-based manipulation, is identified and addressed. Specifically, a tactile-sensorized tool stick that fits well in the narrow space is used to provide multi-point contact sensing for object manipulation. A reinforcement learning (RL) agent with a hybrid action space is then proposed to acquire the optimal policy for manipulating the objects without prior knowledge of their physical properties. To accelerate on-hardware training, a focused training strategy is adopted with the hypothesis that an agent trained on a small set of representative shapes can be generalized to a wide range of everyday objects. Additionally, a curriculum on terminal goals is designed to further accelerate the hardware-based training process. Comparative experiments and ablation studies have been conducted to evaluate the effectiveness and robustness of the proposed approach, highlighting the high success rate of our solution in retrieving everyday objects.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2023

@article{acar2023visual,

title = {Visual-Policy Learning through Multi-Camera View to Single-Camera View Knowledge Distillation for Robot Manipulation Tasks},

author = {Cihan Acar and Kuluhan Binici and Alp Tekirdağ and Yan Wu},

url = {https://ieeexplore.ieee.org/document/10327777},

doi = {10.1109/LRA.2023.3336245},

year = {2023},

date = {2023-11-23},

urldate = {2023-11-23},

journal = {IEEE Robotics and Automation Letters},

pages = {1-8},

abstract = {The use of multi-camera views simultaneously has been shown to improve the generalization capabilities and performance of visual policies. However, using multiple cameras in real-world scenarios can be challenging. In this study, we present a novel approach to enhance the generalization performance of vision-based Reinforcement Learning (RL) algorithms for robotic manipulation tasks. Our proposed method involves utilizing a technique known as knowledge distillation, in which a “teacher” policy, pre-trained with multiple camera viewpoints, guides a “student” policy in learning from a single camera viewpoint. To enhance the student policy's robustness against camera location perturbations, it is trained using data augmentation and extreme viewpoint changes. As a result, the student policy learns robust visual features that allow it to locate the object of interest accurately and consistently, regardless of the camera viewpoint. The efficacy and efficiency of the proposed method were evaluated in both simulation and real-world environments. The results demonstrate that the single-view visual student policy can successfully learn to grasp and lift a challenging object, which was not possible with a single-view policy alone. Furthermore, the student policy demonstrates zero-shot transfer capability, where it can successfully grasp and lift objects in real-world scenarios for unseen visual configurations [Video attachment: https://youtu.be/CnDQK9ly5eg]},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@article{marchesi2023cultural,

title = {Cultural differences in joint attention and engagement in mutual gaze with a robot face},

author = {Serena Marchesi and Abdulaziz Abubshait and Kyveli Kompatsiari and Yan Wu and Agnieszka Wykowska},

url = {https://yan-wu.com/wp-content/uploads/2023/07/marchesi2023cultural.pdf},

doi = {10.1038/s41598-023-38704-7},

year = {2023},

date = {2023-07-19},

urldate = {2023-07-19},

journal = {Scientific Reports},

volume = {13},

issue = {11689},

abstract = {Joint attention is a pivotal mechanism underlying the human ability to interact with one another. The fundamental nature of joint attention in the context of social cognition has led researchers to develop tasks that address this mechanism and operationalize it in a laboratory setting, in the form of a gaze cueing paradigm. In the present study, we addressed the question of whether engaging in joint attention with a robot face is culture-specific. We adapted a classical gaze-cueing paradigm such that a robot avatar cued participants’ gaze subsequent to either engaging participants in eye contact or not. Our critical question of interest was whether the gaze cueing effect (GCE) is stable across different cultures, especially if cognitive resources to exert top-down control are reduced. To achieve the latter, we introduced a mathematical stress task orthogonally to the gaze cueing protocol. Results showed a larger GCE in the Singapore sample than in the Italian sample, independent of gaze type (eye contact vs. no eye contact) or amount of experienced stress, which translates to available cognitive resources. Moreover, because participants rated after each block how engaged they felt with the robot avatar during the task, we observed that Italian participants rated the avatar as more engaging during the eye contact blocks than during the no eye contact blocks, whereas Singaporean participants showed no difference in engagement between gaze conditions. We discuss the results in terms of cultural differences in robot-induced joint attention, and engagement in eye contact, as well as the dissociation between implicit and explicit measures related to processing of gaze.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@inproceedings{language2023sui,

title = {Language Models can do Zero-Shot Visual Referring Expression Comprehension},

author = {Xiuchao Sui and Shaohua Li and Hong Yang and Hongyuan Zhu and Yan Wu},

url = {https://openreview.net/forum?id=F7mdgA7c2zD},

year = {2023},

date = {2023-05-01},

urldate = {2023-05-01},

booktitle = {Eleventh International Conference on Learning Representations (ICLR) 2023},

series = {Tiny Paper},

abstract = {The use of visual referring expressions is an important aspect of human-robot interactions. Comprehending referring expressions (ReC) like ``the brown cookie near the cup'' requires understanding both self-referential expressions, ``brown cookie'', and relational referential expressions, ``near the cup''. Large pretrained Vision-Language models like CLIP excel at handling self-referential expressions but struggle with the latter. In this work, we reframe ReC as a language reasoning task and explore whether it can be addressed using large pretrained language models (LLMs), including Bing Chat and ChatGPT. Given the textual attribute tuples {object category, color, center location, size}, ChatGPT performs unstably on understanding spatial relationships even with heavy prompt engineering, while Bing Chat shows strong and stable zero-shot relation reasoning. Evaluation on RefCOCO/g datasets and scenarios of interactive robot grasping shows that LLMs can do ReC with decent performance. This suggests vast potential for using LLMs to enhance reasoning in vision tasks.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@workingpaper{zandonati2023investigating,

title = {Investigating vision foundational models for tactile representation learning},

author = {Ben Zandonati and Ruohan Wang and Ruihan Gao and Yan Wu},

url = {https://arxiv.org/abs/2305.00596},

year = {2023},

date = {2023-04-30},

abstract = {Tactile representation learning (TRL) equips robots with the ability to leverage touch information, boosting performance in tasks such as environment perception and object manipulation. However, the heterogeneity of tactile sensors results in many sensor- and task-specific learning approaches. This limits the efficacy of existing tactile datasets, and the subsequent generalisability of any learning outcome. In this work, we investigate the applicability of vision foundational models to sensor-agnostic TRL, via a simple yet effective transformation technique to feed the heterogeneous sensor readouts into the model. Our approach recasts TRL as a computer vision (CV) problem, which permits the application of various CV techniques for tackling TRL-specific challenges. We evaluate our approach on multiple benchmark tasks, using datasets collected from four different tactile sensors. Empirically, we demonstrate significant improvements in task performance, model robustness, as well as cross-sensor and cross-task knowledge transferability with limited data requirements.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

@article{interaction2023zhang,

title = {Interaction Control for Tool Manipulation on Deformable Objects Using Tactile Feedback},

author = {Hanwen Zhang and Zeyu Lu and Wenyu Liang and Haoyong Yu and Yao Mao and Yan Wu},

doi = {10.1109/LRA.2023.3257680},

issn = {2377-3766},

year = {2023},

date = {2023-03-15},

journal = {IEEE Robotics and Automation Letters},

volume = {8},

issue = {5},

pages = {2700-2707},

abstract = {The human sense of touch enables us to perform delicate tasks on deformable objects and/or in a vision-denied environment. To achieve similar desirable interactions for robots, such as administering a swab test, tactile information sensed beyond the tool-in-hand is crucial for contact state estimation and contact force control. In this letter, a tactile-guided planning and control framework using GTac, a heteroGeneous Tactile sensor tailored for interaction with deformable objects beyond the immediate contact area, is proposed. The biomimetic GTac in use is an improved version optimized for readout linearity, which provides reliability in contact state estimation and force tracking. A tactile-based classification and manipulation process is designed to estimate and align the contact angle between the tool and the environment. Moreover, a Koopman operator-based optimal control scheme is proposed to address the challenges in nonlinear control arising from the interaction with the deformable object. Finally, several experiments are conducted to verify the effectiveness of the proposed framework. The experimental results demonstrate that the proposed framework can accurately estimate the contact angle as well as achieve excellent tracking performance and strong robustness in force control.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@article{tian2023reinforcement,

title = {Reinforcement learning under temporal logic constraints as a sequence modeling problem},

author = {Daiying Tian and Hao Fang and Qingkai Yang and Haoyong Yu and Wenyu Liang and Yan Wu},

url = {https://yan-wu.com/wp-content/uploads/2024/11/tian2023reinforcement.pdf},

doi = {10.1016/j.robot.2022.104351},

issn = {0921-8890},

year = {2023},

date = {2023-03-01},

urldate = {2023-03-01},

journal = {Robotics and Autonomous Systems},

volume = {161},

pages = {104351},

abstract = {Reinforcement learning (RL) under temporal logic typically suffers from slow propagation for credit assignment. Inspired by a recent advancement in machine learning called the trajectory transformer, this paper models reinforcement learning under Temporal Logic (TL) as a sequence modeling problem, in which an agent uses a transformer to fit the optimal policy satisfying Finite Linear Temporal Logic (LTLf) tasks. To combat the sparse reward issue, dense reward functions for LTLf are designed. To reduce computational complexity, a sparse transformer with local and global attention is constructed to automatically conduct credit assignment, which removes the time-consuming value iteration process. The optimal action is found by beam search performed in the transformers. The proposed method generates a series of policies fitted by sparse transformers, which consistently achieve high accuracy in fitting the demonstrations. Finally, the effectiveness of the proposed method is demonstrated by simulations in Mini-Grid environments.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@article{robust2023wang,

title = {Robust Position Control of a Continuum Manipulator Based on Selective Approach and Koopman Operator},

author = {Haodong Wang and Wenyu Liang and Boyuan Liang and Hongliang Ren and Zhijiang Du and Yan Wu},

doi = {10.1109/TIE.2023.3236082},

issn = {0278-0046},

year = {2023},

date = {2023-01-17},

journal = {IEEE Transactions on Industrial Electronics},

abstract = {Continuum manipulators have infinite degrees of freedom and high flexibility, which makes accurate modeling and control challenging. Common modeling methods include mechanical modeling, neural network-based modeling, and the constant curvature assumption. However, the inverse kinematics of the mechanical modeling strategy is difficult to obtain, while a neural network strategy may not converge in some applications. For algorithm implementation, the constant curvature assumption is used as the basis to design the controller. When the driving wire is tight, the linear controller under the constant curvature assumption works well in manipulator position control. However, this assumption of linearity between the deformation angle and the driving input value breaks down with repeated use of the driving wires, which inevitably lengthen. This degrades the accuracy of the controller. In this work, Koopman theory is proposed to identify the nonlinear model of the continuum manipulator. Under the linearized model, the control input is obtained through model predictive control (MPC). As the lifted function can affect the effectiveness of the Koopman operator-based MPC (K-MPC), a novel method for designing the lifted function using Legendre polynomials is proposed. To attain higher control efficiency and computational accuracy, a selective control scheme based on the state of the driving wires is proposed. When the driving wire is tight, the linear controller is employed; otherwise, the K-MPC is adopted. Finally, a set of static and dynamic experiments has been conducted using an experimental prototype. The results demonstrate high effectiveness and good performance of the selective control scheme.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@article{visuo2023liang,

title = {Visuo-Tactile Feedback-Based Robot Manipulation for Object Packing},

author = {Wenyu Liang and Fen Fang and Cihan Acar and Wei Qi Toh and Ying Sun and Qianli Xu and Yan Wu},

doi = {10.1109/LRA.2023.3236884},

issn = {2377-3766},

year = {2023},

date = {2023-01-13},

journal = {IEEE Robotics and Automation Letters},

volume = {8},

issue = {2},

pages = {1151 - 1158},

abstract = {Robots are increasingly expected to manipulate objects whose properties carry high perceptual uncertainty when sensed through any single modality, which directly affects manipulation success. Object packing is a challenging task in robot manipulation. In this work, a new visuo-tactile feedback-based manipulation planning framework for object packing is proposed, which makes use of on-the-fly multisensory feedback and an attention-guided deep affordance model as perceptual states, together with a deep reinforcement learning (DRL) pipeline. Notably, multiple sensory modalities, vision and touch [tactile and force/torque (F/T)], are employed to predict and indicate the manipulable regions of multiple affordances (i.e., graspability and pushability) for objects with similar appearances but different intrinsic properties (e.g., mass distribution). To improve manipulation efficiency, the DRL algorithm is trained to select the optimal actions for successful object manipulation. The proposed method is evaluated on both an open dataset and our collected dataset, and demonstrated in an object packing use case. The results show that the proposed method outperforms existing methods, achieving better accuracy with much higher efficiency.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2022

@inproceedings{wang2022end,

title = {End-to-end Reinforcement Learning of Robotic Manipulation with Robust Keypoints Representation},

author = {Tianying Wang and En Yen Puang and Marcus Lee and Yan Wu and Wei Jing},

doi = {10.23919/APSIPAASC55919.2022.9980136},

isbn = {978-616-590-477-3},

year = {2022},

date = {2022-11-10},

urldate = {2022-11-10},

booktitle = {2022 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC)},

abstract = {We present an end-to-end Reinforcement Learning (RL) framework for robotic manipulation tasks that uses a robust and efficient keypoints representation. The proposed method learns keypoints from camera images as the state representation through a self-supervised autoencoder architecture. The keypoints encode the geometric information, as well as the relationship between the tool and the target, in a compact representation to ensure efficient and robust learning. After keypoint learning, the RL step learns the robot motion from the extracted keypoints state representation. Both keypoint learning and RL are carried out entirely in the simulated environment. We demonstrate the effectiveness of the proposed method on robotic manipulation tasks, including grasping and pushing, in different scenarios. We also investigate the generalization capability of the trained model. In addition to the robust keypoints representation, we further apply domain randomization and adversarial training examples to achieve zero-shot sim-to-real transfer in real-world robotic manipulation tasks.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

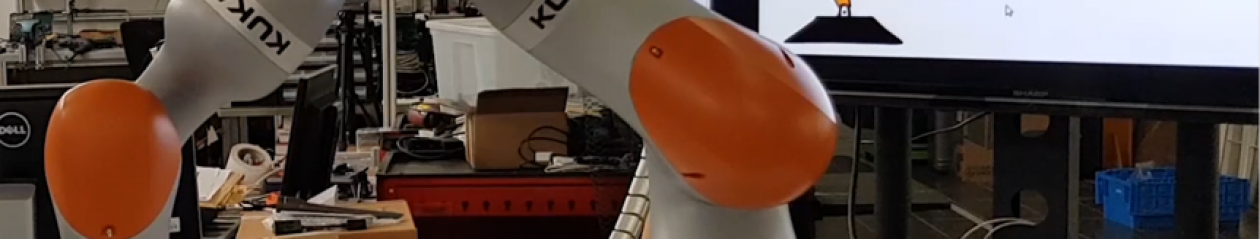

@inproceedings{liang2022tactile,

title = {Tactile-Guided Dynamic Object Planar Manipulation},

author = {Boyuan Liang and Wenyu Liang and Yan Wu},

doi = {10.1109/IROS47612.2022.9981270},

isbn = {978-1-6654-7927-1},

year = {2022},

date = {2022-10-23},

urldate = {2022-10-31},

booktitle = {2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages = {3203-3209},

publisher = {IEEE},

abstract = {Planar pushing is a fundamental robot manipulation task, with most algorithms built upon the quasi-static assumption. Under this assumption, the end-effector must apply force on the pushed object along the full moving trajectory, which means that the target position must lie within the robot's workspace. To enable a robot to deliver objects outside its workspace and facilitate faster delivery, the quasi-static assumption should be lifted in favour of dynamic manipulation. In this work, we propose a two-stage data-driven manipulation method that hits an unknown object so that it reaches a target position. This expands the reachability of the manipulated object beyond the robot's workspace. In the first stage, the robot, equipped with a tactile sensor, explores the stable pushing region (SPR) on the given object using gain-scheduling PD control, with the contact centre estimated to maintain full contact between the object and the end-effector. In the second stage, a learning-based approach generates the impulse the object should receive at the SPR to reach a target sliding distance. The performance of the proposed method is evaluated on a KUKA LBR iiwa 14 R820 robot manipulator with a XELA tactile sensor.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{fang2022improving,

title = {Improving Generalization of Reinforcement Learning Using a Bilinear Policy Network},

author = {Fen Fang and Wenyu Liang and Yan Wu and Qianli Xu and Joo-Hwee Lim},

doi = {10.1109/ICIP46576.2022.9897349},

isbn = {978-1-6654-9620-9},

year = {2022},

date = {2022-10-18},

urldate = {2022-10-18},

booktitle = {2022 IEEE International Conference on Image Processing (ICIP)},

pages = {991-995},

publisher = {IEEE},

abstract = {In deep reinforcement learning (DRL), the agent is usually trained on seen environments by optimizing a policy network. However, the learned policy is difficult to generalize properly to unseen environments, even when the environmental variations are insignificant. This is partly because the policy network cannot effectively learn representations of the subtle visual differences among highly similar states. A bilinear structured model containing two feature extractors allows pairwise feature interactions in a translationally invariant manner, which makes it particularly useful for recognizing subtle differences among highly similar states. In this work, a bilinear policy network is therefore employed to enhance representation learning and thus improve the generalization of DRL. The proposed bilinear policy network is tested on various DRL tasks, including a control task on path planning for active object detection and Grid World, an AI game task. The test results show that the proposed network can improve the generalization of DRL.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@article{tian2022two,

title = {Two-Phase Motion Planning under Signal Temporal Logic Specifications in Partially Unknown Environments},

author = {Daiying Tian and Hao Fang and Qingkai Yang and Zixuan Guo and Jinqiang Cui and Wenyu Liang and Yan Wu},

doi = {10.1109/TIE.2022.3203752},

issn = {0278-0046},

year = {2022},

date = {2022-09-09},

urldate = {2022-09-09},

journal = {IEEE Transactions on Industrial Electronics},

abstract = {This paper studies the planning problem for robots residing in partially unknown environments under signal temporal logic (STL) specifications, whereas most existing planning methods using STL rely on a fully known environment. In many practical scenarios, however, robots do not have prior information about all obstacles. In this paper, a novel two-phase planning method, i.e., offline exploration followed by online planning, is proposed to efficiently synthesize paths that satisfy STL tasks. In the offline exploration phase, a Rapidly Exploring Random Tree* (RRT*) is grown from task regions under the guidance of timed transducers, which guarantees that the resultant paths satisfy the task specifications. In the online phase, the path with minimum cost in RRT* is determined when an initial configuration is assigned. This path is then set as the reference for the timed elastic band algorithm, which modifies the path until it has no collisions with obstacles. The proposed planning framework is shown to reduce the online computational burden and to avoid collisions with unknown obstacles. The effectiveness and superiority of the proposed method are demonstrated in simulations and real-world experiments.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@inproceedings{fang2022self,

title = {Self-Supervised Reinforcement Learning for Active Object Detection},

author = {Fen Fang and Wenyu Liang and Yan Wu and Qianli Xu and Joo Hwee Lim},

doi = {10.1109/LRA.2022.3193019},

issn = {2377-3766},

year = {2022},

date = {2022-07-21},

urldate = {2022-10-31},

booktitle = {2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

volume = {7},

number = {4},

pages = {10224-10231},

publisher = {IEEE},

abstract = {Active object detection (AOD) offers a significant advantage in expanding the perceptual capacity of a robotic system. AOD is formulated as a sequential action decision process to determine optimal viewpoints for identifying objects of interest in a visual scene. While reinforcement learning (RL) has been successfully used to solve many AOD problems, conventional RL methods suffer from (i) sample inefficiency and (ii) unstable outcomes due to the inter-dependencies of action type (direction of view change) and action range (step size of view change). To address these issues, we propose a novel self-supervised RL method, which employs self-supervised representations of viewpoints to initialize the policy network, and a self-supervised loss on action range to enhance the network parameter optimization. The output and target pairs of the self-supervised learning loss are automatically generated from the online prediction of the policy network and a range shrinkage algorithm (RSA), respectively. The proposed method is evaluated and benchmarked on two public datasets (T-LESS and AVD) using on-policy and off-policy RL algorithms. The results show that our method enhances detection accuracy and achieves faster convergence on both datasets. When evaluated in a more complex environment with a larger state space (where viewpoints are more densely sampled), our method achieves more robust and stable performance. Our experiment in a real robot application scenario, disambiguating similar objects in a cluttered scene, has also demonstrated the effectiveness of the proposed method.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{sui2022craft,

title = {CRAFT: Cross-Attentional Flow Transformer for Robust Optical Flow},

author = {Xiuchao Sui and Shaohua Li and Xue Geng and Yan Wu and Xinxing Xu and Yong Liu and Rick Goh and Hongyuan Zhu},

url = {https://openaccess.thecvf.com/content/CVPR2022/papers/Sui_CRAFT_Cross-Attentional_Flow_Transformer_for_Robust_Optical_Flow_CVPR_2022_paper.pdf},

year = {2022},

date = {2022-06-24},

urldate = {2022-06-24},

booktitle = {2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

abstract = {Optical flow estimation aims to find the 2D motion field by identifying corresponding pixels between two images. Despite the tremendous progress of deep learning-based optical flow methods, it remains challenging to accurately estimate large displacements with motion blur. This is mainly because the correlation volume, the basis of pixel matching, is computed as the dot product of the convolutional features of the two images. The locality of convolutional features makes the computed correlations susceptible to various noises. On large displacements with motion blur, noisy correlations can cause severe errors in the estimated flow. To overcome this challenge, we propose a new architecture, "CRoss-Attentional Flow Transformer" (CRAFT), aiming to revitalize the correlation volume computation. In CRAFT, a Semantic Smoothing Transformer layer transforms the features of one frame, making them more global and semantically stable. In addition, the dot-product correlations are replaced with transformer Cross-Frame Attention. This layer filters out feature noises through the Query and Key projections and computes more accurate correlations. On the Sintel (Final) and KITTI (foreground) benchmarks, CRAFT achieves new state-of-the-art performance. Moreover, to test the robustness of different models on large motions, we designed an image shifting attack that shifts input images to generate large artificial motions. Under this attack, CRAFT performs much more robustly than two representative methods, RAFT and GMA. The code of CRAFT is available at https://github.com/askerlee/craft.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@article{diao2022superpixel,

title = {Superpixel-based attention graph neural network for semantic segmentation in aerial images},

author = {Qi Diao and Yaping Dai and Ce Zhang and Yan Wu and Xiaoxue Feng and Feng Pan},

doi = {10.3390/rs14020305},

year = {2022},

date = {2022-01-10},

urldate = {2022-01-10},

journal = {Remote Sensing},

volume = {14},

number = {2},

abstract = {Semantic segmentation is one of the significant tasks in understanding aerial images with high spatial resolution. Recently, Graph Neural Networks (GNNs) and attention mechanisms have achieved excellent performance in semantic segmentation of general images and have been applied to aerial images. In this paper, we propose a novel Superpixel-based Attention Graph Neural Network (SAGNN) for semantic segmentation of high spatial resolution aerial images. Our network constructs a K-Nearest Neighbor (KNN) graph for each image, where each node corresponds to a superpixel in the image and is associated with a hidden representation vector. The hidden representation vector is initialized with the appearance feature extracted from the image by a unary Convolutional Neural Network (CNN). Relying on the attention mechanism and recursive functions, each node then updates its hidden representation according to its current state and the incoming information from its neighbors. The final representation of each node is used to predict the semantic class of each superpixel. The attention mechanism enables graph nodes to aggregate neighbor information differentially, which extracts higher-quality features. Furthermore, superpixels not only save computational resources but also preserve object boundaries, yielding more accurate predictions. The accuracy of our model on the Potsdam and Vaihingen public datasets exceeds that of all benchmark approaches, reaching 90.23% and 89.32%, respectively.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2021

@article{liang2021parameterized,

title = {Parameterized Particle Filtering for Tactile-based Simultaneous Pose and Shape Estimation},

author = {Boyuan Liang and Wenyu Liang and Yan Wu},

url = {https://ieeexplore.ieee.org/document/9667178},

doi = {10.1109/LRA.2021.3139381},

issn = {2377-3766},

year = {2021},

date = {2021-12-31},

urldate = {2021-12-31},

journal = {IEEE Robotics and Automation Letters (RA-L)},

volume = {7},

number = {2},

pages = {1270-1277},

abstract = {Object state and shape estimation is essential in many robotic manipulation tasks (e.g., in-hand manipulation, insertion). While such estimation typically relies on visual perception, haptics becomes a reliable source of perception for tasks carried out in vision-degraded or vision-denied environments. In this work, we propose the use of parameterized particle filtering to estimate object pose and shape in 3D space using tactile feedback. Starting from a rough initial estimate, this approach achieves high accuracy using contact information between the object and a collision surface. Compared with conventional particle filtering, this approach significantly reduces the number of particles required for a satisfactory estimate, making it applicable to pose and shape estimation problems where the number of degrees of freedom is high or even uncertain. Moreover, the proposed method can automatically choose the fastest-converging contact action during the pose estimation stage to shorten the time required. A set of experiments both in simulation and on a real-world robot was conducted to validate the proposed method and compare it against the state-of-the-art approach in the literature. Results from both sets of experiments show that the proposed method can determine the pose and shape of the objects with very high accuracy within a small number of iterations.},

note = {also accepted by ICRA 2022},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@conference{towards2021gauthier,

title = {Towards a Programming-Free Robotic System for Assembly Tasks Using Intuitive Interactions},

author = {Nicolas Gauthier and Wenyu Liang and Qianli Xu and Fen Fang and Liyuan Li and Ruihan Gao and Yan Wu and Joo Hwee Lim},

editor = {Haizhou Li and Shuzhi Sam Ge and Yan Wu and Agnieszka Wykowska and Hongsheng He and Xiaorui Liu and Dongyu Li and Jairo Perez-Osorio},

url = {https://link.springer.com/chapter/10.1007/978-3-030-90525-5_18

http://yan-wu.com/wp-content/uploads/2021/11/gauthier2021towards.pdf},

doi = {10.1007/978-3-030-90525-5_18},

isbn = {978-3-030-90525-5},

year = {2021},

date = {2021-11-02},

booktitle = {The 13th International Conference on Social Robotics (ICSR 2021)},

volume = {13086},

pages = {203-215},

publisher = {Springer},

series = {Lecture Notes in Computer Science},

abstract = {Although industrial robots are successfully deployed in many assembly processes, high-mix, low-volume applications are still difficult to automate, as they involve small batches of frequently changing parts. Setting up a robotic system for these tasks requires repeated re-programming by expert users, incurring extra time and costs. In this paper, we present a solution which enables a robot to learn new objects and new tasks from non-expert users without the need for programming. The use case presented here is the assembly of a gearbox mechanism. In the proposed solution, first, the robot can autonomously register new objects using a visual exploration routine and train a deep learning model for object detection accordingly. Second, the user can teach new tasks to the system via visual demonstration in a natural manner. Finally, using multimodal perception from RGB-D (color and depth) cameras and a tactile sensor, the robot can execute the taught tasks with adaptation to changing configurations. Depending on the task requirements, it can also activate human-robot collaboration capabilities. In summary, these three main modules enable any non-expert user to configure a robot for new applications in a fast and intuitive way.},

note = {Best Presentation Award},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

@workshop{marchesi2021consequences,

title = {The consequences of being human-like – an intercultural study},

author = {Serena Marchesi and Davide De Tommaso and Jairo Perez-Osorio and Ming Min Tan and Ching Ru Hoh and Yan Wu and Agnieszka Wykowska},

year = {2021},

date = {2021-10-01},

booktitle = {IROS 2021 Workshop on Human-like Behavior and Cognition in Robots},

keywords = {},

pubstate = {published},

tppubtype = {workshop}

}

@inproceedings{gao2021explainability,

title = {On Explainability and Sensor-Adaptability of a Robot Tactile Texture Representation Using a Two-Stage Recurrent Networks},

author = {Ruihan Gao and Tian Tian and Zhiping Lin and Yan Wu},

year = {2021},

date = {2021-09-27},

booktitle = {2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

abstract = {The ability to simultaneously distinguish objects, materials, and their associated physical properties is a fundamental function of the sense of touch. Recent advances in tactile sensors and machine learning techniques allow more accurate and complex modelling of robotic tactile sensations. However, many state-of-the-art (SotA) approaches focus solely on constructing black-box models to achieve ever higher classification accuracy and fail to adapt across sensors with unique spatial-temporal data formats. In this work, we propose an Explainable and Sensor-Adaptable Recurrent Networks (ExSARN) model for tactile texture representation. The ExSARN model consists of two-stage recurrent networks fed by a sensor-specific header network. The first-stage recurrent network emulates human touch receptors and decouples sensor-specific tactile sensations into different frequency response bands, while the second stage encodes the overall temporal signature as a variational recurrent autoencoder. We infuse the latent representation with ternary labels that qualitatively represent texture properties (e.g., roughness and stiffness), which facilitates representation learning and provides explainability to the latent space. The ExSARN model is tested on texture datasets collected with two different tactile sensors. Our results show that the proposed model not only achieves higher accuracy but also adapts across sensors with different sampling frequencies and data formats. The addition of crudely obtained qualitative property labels offers a practical way to enhance the interpretability of the latent space, facilitate property inference on unseen materials, and improve the overall performance of the model.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{xu2021efficient,

title = {Towards Efficient Multiview Object Detection with Adaptive Action Prediction},

author = {Qianli Xu and Fen Fang and Nicolas Gauthier and Wenyu Liang and Yan Wu and Liyuan Li and Joo Hwee Lim},

url = {https://ieeexplore.ieee.org/document/9561388},

doi = {10.1109/ICRA48506.2021.9561388},

isbn = {978-1-7281-9077-8},

year = {2021},

date = {2021-05-31},

booktitle = {2021 IEEE International Conference on Robotics and Automation (ICRA)},

publisher = {IEEE},

abstract = {Active vision is a desirable perceptual feature for robots. Existing approaches usually make strong assumptions about the task and environment, and are thus less robust and efficient. This study proposes an adaptive view planning approach to boost the efficiency and robustness of active object detection. We formulate the multi-object detection task as an active multiview object detection problem, given the initial locations of the objects. We then propose a novel adaptive action prediction (A2P) method built on a deep Q-learning network with a dueling architecture. The A2P method performs view planning based on visual information from multiple objects and adjusts action ranges according to the task status. Evaluated on the AVD dataset, A2P yields a 21.9% increase in detection accuracy in unfamiliar environments while improving efficiency by 22.7%. On the T-LESS dataset, multi-object detection boosts efficiency by more than 30% while achieving equivalent detection accuracy.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{liang2021dexterous,

title = {Dexterous Manoeuvre through Touch in a Cluttered Scene},

author = {Wenyu Liang and Qinyuan Ren and Xiaoqiao Chen and Junli Gao and Yan Wu},

url = {http://yan-wu.com/wp-content/uploads/2021/03/liang2021dexterous.pdf

https://ieeexplore.ieee.org/document/9562061},

doi = {10.1109/ICRA48506.2021.9562061},

isbn = {978-1-7281-9077-8},

year = {2021},

date = {2021-05-31},

booktitle = {2021 IEEE International Conference on Robotics and Automation (ICRA)},

publisher = {IEEE},

abstract = {Manipulation in a densely cluttered environment creates complex perception challenges for closing the control loop, many of which stem from the sophisticated physical interaction between the environment and the manipulator. Drawing on biological sensorimotor control, tactile sensing can be used in such scenarios to provide an additional dimension of rich contact information from the interaction, supporting decision making and action selection when manoeuvring towards a target. In this paper, a new bioinspired tactile-based motion planning and control framework is proposed and developed for a robot manipulator to manoeuvre in a cluttered environment. The framework uses an iterative two-stage machine learning approach: an autoencoder extracts important cues from tactile sensory readings, while a reinforcement learning technique generates an optimal motion sequence to reach the given target efficiently. The framework is implemented on a KUKA LBR iiwa robot mounted with a SynTouch BioTac tactile sensor and tested in real-life experiments. The results show that the system is able to move the end-effector through the cluttered environment to reach the target effectively.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{xu2021tailor,

title = {TAILOR: Teaching with Active and Incremental Learning for Object Registration},

author = {Qianli Xu and Nicolas Gauthier and Wenyu Liang and Fen Fang and Hui Li Tan and Ying Sun and Yan Wu and Liyuan Li and Joo Hwee Lim},

url = {http://yan-wu.com/wp-content/uploads/2021/03/xu2021tailor.pdf

http://yan-wu.com/wp-content/uploads/2021/03/xu2021tailor_poster.pdf

https://twitter.com/RealAAAI/status/1364017094086389760

https://ojs.aaai.org/index.php/AAAI/article/view/18031},

year = {2021},

date = {2021-05-01},

booktitle = {Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI)},

volume = {35},

number = {18},

pages = {16120-16123},

publisher = {AAAI},

abstract = {When a robot is deployed to a new task, it often needs to be trained to detect novel objects. With a deep-learning-based detector, one has to collect and annotate a large number of images of the novel objects for training, which is labor-intensive, time-consuming and not scalable. We present TAILOR, a method and system for object registration with active and incremental learning. When instructed by a human teacher to register an object, TAILOR automatically selects viewpoints to capture informative images by actively exploring viewpoints, and employs a fast incremental learning algorithm to learn new objects without forgetting previously learned ones. We demonstrate the effectiveness of our method with a KUKA robot that learns novel objects used in a real-world gearbox assembly task through natural interactions.},

note = {AAAI'21 Best Demo Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@article{li2021time,

title = {On Time-Synchronized Stability and Control},

author = {Dongyu Li and Haoyong Yu and Keng Peng Tee and Yan Wu and Shuzhi Sam Ge and Tong Heng Lee},

url = {https://ieeexplore.ieee.org/document/9340609

http://yan-wu.com/wp-content/uploads/2021/02/li2021time.pdf},

doi = {10.1109/TSMC.2021.3050183},

issn = {2168-2216},

year = {2021},

date = {2021-01-31},

journal = {IEEE Transactions on Systems, Man, and Cybernetics: Systems},

pages = {1-14},

abstract = {Previous research on finite-time control focuses on forcing a system state (vector) to converge within a certain time, regardless of how each state element converges. In the present work, we introduce a control problem with unique finite/fixed-time stability considerations, namely time-synchronized stability (TSS), where all the system state elements converge to the origin at the same time, and fixed-TSS, where the upper bound of the synchronized settling time is independent of the initial state. Accordingly, sufficient conditions for (fixed-) TSS are presented. On the basis of these formulations of the time-synchronized convergence property, the classical sign function and a norm-normalized sign function are first revisited. Then, in terms of this notion of TSS, we investigate their differences in control system design for first-order systems (to illustrate the key concepts and outcomes), paying special attention to their convergence performance. It is found that while both sign functions contribute to system stability, norm-normalized sign functions additionally help a system achieve TSS. Furthermore, we propose a fixed-time-synchronized sliding-mode controller for second-order systems, and we also consider the related issue of singularity avoidance. Finally, numerical simulations are conducted to present the (fixed-) time-synchronized features attained, and further explorations of the merits of the proposed (fixed-) TSS are described.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

@inproceedings{6d2020cheng,

title = {6D Pose Estimation with Correlation Fusion},

author = {Yi Cheng and Hongyuan Zhu and Ying Sun and Cihan Acar and Wei Jing and Yan Wu and Liyuan Li and Cheston Tan and Joo Hwee Lim},

url = {https://arxiv.org/abs/1909.12936

https://ieeexplore.ieee.org/document/9412238},

doi = {10.1109/ICPR48806.2021.9412238},

isbn = {978-1-7281-8808-9},

year = {2021},

date = {2021-01-15},

booktitle = {25th International Conference on Pattern Recognition (ICPR)},

pages = {2988-2994},

address = {Milan, Italy},

abstract = {6D object pose estimation is widely applied in robotic tasks such as grasping and manipulation. Prior methods using RGB-only images are vulnerable to heavy occlusion and poor illumination, so it is important to complement them with depth information. However, existing methods using RGB-D data do not adequately exploit the consistent and complementary information between the two modalities. In this paper, we present a novel method that uses an attention mechanism to effectively consider the correlation within and across RGB and depth modalities and learn discriminative multi-modal features. Effective fusion strategies for the intra- and inter-correlation modules are then explored to ensure efficient information flow between RGB and depth. To the best of our knowledge, this is the first work to explore effective intra- and inter-modality fusion in 6D pose estimation. Experimental results show that our method helps achieve state-of-the-art performance on the LineMOD and YCB-Video datasets and benefits a robot grasping task.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2020

@inproceedings{gao2020supervised,

title = {Supervised Autoencoder Joint Learning on Heterogeneous Tactile Sensory Data: Improving Material Classification Performance},

author = {Ruihan Gao and Tasbolat Taunyazov and Zhiping Lin and Yan Wu},

url = {http://yan-wu.com/wp-content/uploads/2020/08/gao2020supervised.pdf

https://ieeexplore.ieee.org/document/9341111},

doi = {10.1109/IROS45743.2020.9341111},

isbn = {978-1-7281-6212-6},

year = {2020},

date = {2020-10-31},

booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

publisher = {IEEE},

address = {Las Vegas, USA},

abstract = {The sense of touch is an essential sensing modality for a robot to interact with the environment, as it provides rich and multimodal sensory information upon contact. It enriches the perceptual understanding of the environment and closes the loop for action generation. One fundamental area of perception in which touch dominates other sensing modalities is the understanding of the materials it interacts with, for example, glass versus plastic. However, unlike vision and audition, which have standardized data formats, the format of tactile data is largely dictated by the sensor manufacturer. This hinders large-scale learning on data collected from heterogeneous sensors and limits the usefulness of publicly available tactile datasets. This paper investigates the joint learnability of data collected from two tactile sensors performing a touch sequence on some common materials. We propose a supervised recurrent autoencoder framework that performs a joint material classification task to improve training effectiveness. The framework is implemented and tested on two sets of tactile data collected in sliding motion on 20 material textures, using the iCub RoboSkin tactile sensors and the SynTouch BioTac sensor, respectively. Our results show that joint learning improves learning efficiency and accuracy on both datasets compared with independent dataset training. This suggests the value of sharing large-scale open tactile datasets collected with different sensors.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{liang2020robust,

title = {Robust Force Tracking Impedance Control of an Ultrasonic Motor-actuated End-effector in a Soft Environment},

author = {Wenyu Liang and Zhao Feng and Yan Wu and Junli Gao and Qinyuan Ren and Tong Heng Lee},

url = {http://yan-wu.com/wp-content/uploads/2020/08/liang2020robust.pdf

https://ieeexplore.ieee.org/document/9340717},

doi = {10.1109/IROS45743.2020.9340717},

year = {2020},

date = {2020-10-31},

booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

publisher = {IEEE},

address = {Las Vegas, USA},

abstract = {Robotic systems are increasingly required not only to generate precise motions to complete their tasks but also to handle interactions with the environment or humans. Notably, soft interaction poses great challenges to force control due to the nonlinear, viscoelastic and inhomogeneous properties of the soft environment. In this paper, a robust impedance control scheme utilizing the integral backstepping technique and integral terminal sliding mode control is proposed to achieve force tracking for an ultrasonic motor-actuated end-effector in a soft environment. In particular, the steady-state performance of the target impedance while in contact with the soft environment is derived and analyzed with the nonlinear Hunt-Crossley model. Finally, the dynamic force tracking performance of the proposed control scheme is verified via several experiments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{taunyazov2020fast,

title = {Fast Texture Classification Using Tactile Neural Coding and Spiking Neural Network},

author = {Tasbolat Taunyazov and Yansong Chua and Ruihan Gao and Harold Soh and Yan Wu},

url = {http://yan-wu.com/wp-content/uploads/2020/08/taunyazov2020fast.pdf

https://ieeexplore.ieee.org/document/9340693},

doi = {10.1109/IROS45743.2020.9340693},

year = {2020},

date = {2020-10-31},

booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

publisher = {IEEE},

address = {Las Vegas, USA},

abstract = {Touch is arguably the most important sensing modality in physical interactions. However, tactile sensing has been largely under-explored in robotics applications owing to the complexity of making perceptual inferences, until recent advancements in machine learning, and deep learning in particular. Touch perception is strongly influenced by both its temporal dimension, similar to audition, and its spatial dimension, similar to vision. While spatial cues can be learned episodically, temporal cues compete against the system's response/reaction time to provide accurate inferences. In this paper, we propose a fast tactile-based texture classification framework that uses a spiking neural network to learn from the neural coding of conventional tactile sensor readings. The framework is implemented and tested on two independent tactile datasets collected in sliding motion on 20 material textures. Our results show that the framework makes much more accurate inferences ahead of time than state-of-the-art learning approaches.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{jing2020multi,

title = {Multi-UAV Coverage Path Planning for the Inspection of Large and Complex Structures via Path Primitive Sampling},

author = {Wei Jing and Di Deng and Yan Wu and Kenji Shimada},

url = {http://yan-wu.com/wp-content/uploads/2020/08/jing2020multi.pdf

https://ieeexplore.ieee.org/document/9341089},

doi = {10.1109/IROS45743.2020.9341089},

year = {2020},

date = {2020-10-31},

booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

publisher = {IEEE},

address = {Las Vegas, USA},

abstract = {We present a multi-UAV Coverage Path Planning (CPP) framework for the inspection of large-scale, complex 3D structures. In the proposed sampling-based coverage path planning method, we formulate the multi-UAV inspection applications as a multi-agent coverage path planning problem. By combining two NP-hard problems, the Set Covering Problem (SCP) and the Vehicle Routing Problem (VRP), a Set-Covering Vehicle Routing Problem (SC-VRP) is formulated and subsequently solved by a modified Biased Random Key Genetic Algorithm (BRKGA) with novel, efficient encoding strategies and local improvement heuristics. We test our proposed method on several complex 3D structures with 3D models extracted from OpenStreetMap. The proposed method outperforms previous methods by reducing the length of the planned inspection path by up to 48%.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{cai2020inferring,

title = {Inferring the Geometric Nullspace of Robot Skills from Human Demonstrations},

author = {Caixia Cai and Ying Siu Liang and Nikhil Somani and Yan Wu},

url = {https://ieeexplore.ieee.org/document/9197174

https://yan-wu.com/wp-content/uploads/2020/05/cai2020inferring.pdf},

doi = {10.1109/ICRA40945.2020.9197174},

issn = {2577-087X},

year = {2020},

date = {2020-05-31},

booktitle = {2020 IEEE International Conference on Robotics and Automation (ICRA)},

pages = {7668-7675},

publisher = {IEEE},

address = {Paris, France},

abstract = {In this paper we present a framework to learn skills from human demonstrations in the form of geometric nullspaces, which can be executed using a robot. We collect data of human demonstrations, fit geometric nullspaces to them, and also infer their corresponding geometric constraint models. These geometric constraints provide a powerful mathematical model as well as an intuitive representation of the skill in terms of the involved objects. To execute the skill using a robot, we combine this geometric skill description with the robot's kinematics and other environmental constraints, from which poses can be sampled for the robot's execution. The result of our framework is a system that takes the human demonstrations as input, learns the underlying skill model, and executes the learnt skill with different robots in different dynamic environments. We evaluate our approach on a simulated industrial robot, and execute the final task on the iCub humanoid robot.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2019

@inproceedings{chi2019motion,

title = {Motion Control of a Soft Circular Crawling Robot via Iterative Learning Control},

author = {Haozhen Chi and Xuefang Li and Wenyu Liang and Yan Wu and Qinyuan Ren},

url = {https://ieeexplore.ieee.org/document/9029234

https://yan-wu.com/wp-content/uploads/2020/05/chi2019motion.pdf},

doi = {10.1109/CDC40024.2019.9029234},

isbn = {978-1-7281-1398-2},

year = {2019},

date = {2019-12-13},

booktitle = {2019 IEEE 58th Conference on Decision and Control (CDC)},

pages = {6524-6529},

publisher = {IEEE},

address = {Nice, France},

abstract = {Soft robots have recently attracted widespread attention due to their ability to work effectively in unstructured environments. As an actuation technology for soft robots, dielectric elastomer actuators (DEAs) exhibit many attractive attributes, such as large strain and high energy density. However, due to nonlinear electromechanical coupling, it is challenging to model a DEA accurately, and it is further difficult to control a DEA-based soft robot. This work studies a novel DEA-based soft circular crawling robot. The kinematics of the soft robot are explored, and a knowledge-based model is established to expedite the controller design. An iterative learning control (ILC) method is then applied to control the soft robot. By employing ILC, the trajectory tracking performance of the robot motion can be improved significantly without a perfect model. Finally, several numerical studies are conducted to illustrate the effectiveness of the ILC.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{wang2019efficient,

title = {Efficient Robotic Task Generalization Using Deep Model Fusion Reinforcement Learning},

author = {Tianying Wang and Hao Zhang and Wei Qi Toh and Hongyuan Zhu and Cheston Tan and Yan Wu and Yong Liu and Wei Jing},

url = {https://ieeexplore.ieee.org/document/8961391

https://www.yan-wu.com/wp-content/uploads/2020/05/wang2019efficient.pdf},

doi = {10.1109/ROBIO49542.2019.8961391},

isbn = {978-1-7281-6321-5},

year = {2019},

date = {2019-12-08},

booktitle = {2019 IEEE International Conference on Robotics and Biomimetics (ROBIO)},

pages = {148-153},

publisher = {IEEE},

address = {Dali, China},

abstract = {Learning-based methods have been used to program robotic tasks in recent years. However, extensive training is usually required not only for the initial task learning but also for generalizing the learned model to the same task in different environments. In this paper, we propose a novel Deep Reinforcement Learning algorithm for efficient task generalization and environment adaptation in the robotic task learning problem. The proposed method efficiently generalizes the previously learned task through model fusion to solve the environment adaptation problem. The proposed Deep Model Fusion (DMF) method reuses and combines previously trained models to improve learning efficiency and results. In addition, we introduce a Multi-objective Guided Reward (MGR) shaping technique to further improve training efficiency. The proposed method was benchmarked against previous methods in various environments to validate its effectiveness.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

@inproceedings{pang2019modelling,

title = {Modeling and Control of A Soft Circular Crawling Robot},

author = {Jiangnan Pang and Yibo Shao and Haozhen Chi and Yan Wu},

url = {https://ieeexplore.ieee.org/document/8927474

https://www.yan-wu.com/wp-content/uploads/2020/05/pang2019modeling.pdf},

doi = {10.1109/IECON.2019.8927474},

isbn = {978-1-7281-4878-6},

year = {2019},

date = {2019-10-17},

booktitle = {The 45th Annual Conference of the IEEE Industrial Electronics Society (IECON)},

volume = {1},

pages = {5243-5248},

publisher = {IEEE},

address = {Lisbon, Portugal},

abstract = {Soft robots have exhibited significant advantages over conventional rigid robots due to their high energy density and strong environmental compliance. Among the soft materials explored for soft robots, dielectric elastomers (DEs) stand out for their muscle-like actuation behavior. However, modeling and control of DE-based soft robots remain challenging because of the nonlinearity and viscoelasticity of DE actuators. This paper focuses on the design, modeling and control of a soft circular robot that is able to achieve 2D motion. To facilitate the design of a motion controller, a dynamic model of the robot is investigated through experimental identification. Based on the model, a feedforward plus feedback control scheme is adopted for the motion control of the robot. Finally, both simulations and experiments are conducted to verify the effectiveness of the proposed model and control approach.},